Regularization in Machine Learning: Meaning

In regression, regularization is a technique that shrinks (constrains) the coefficient estimates towards zero. It reduces errors by fitting the function appropriately on the given training set, which helps avoid overfitting.

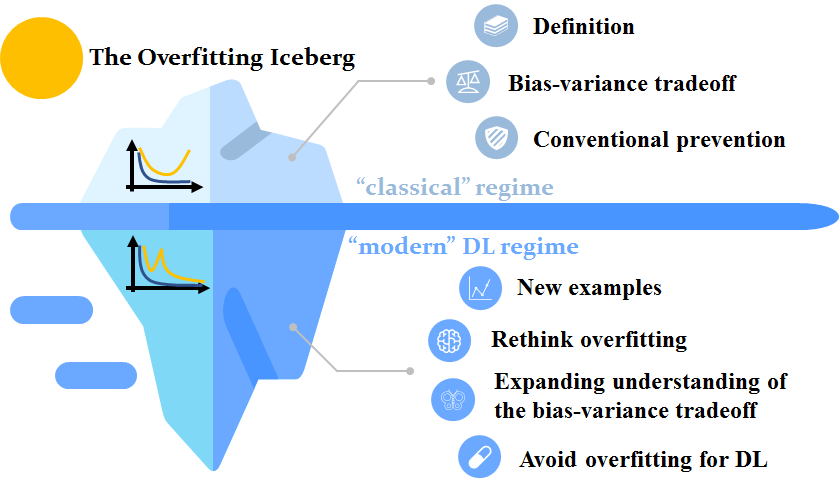

The Overfitting Iceberg (Machine Learning Blog, ML@CMU, Carnegie Mellon University)

Regularization is one of the most important concepts in machine learning.

In general, regularization means making things regular or acceptable. As data scientists, we need to learn it thoroughly, and sometimes one resource is not enough to get a good understanding of a concept.

In other words, this technique discourages us from learning a more complex or flexible model, in order to avoid the problem of overfitting. Regularization helps minimize errors by fitting the function appropriately on the training data, which decreases the possibility of overfitting.

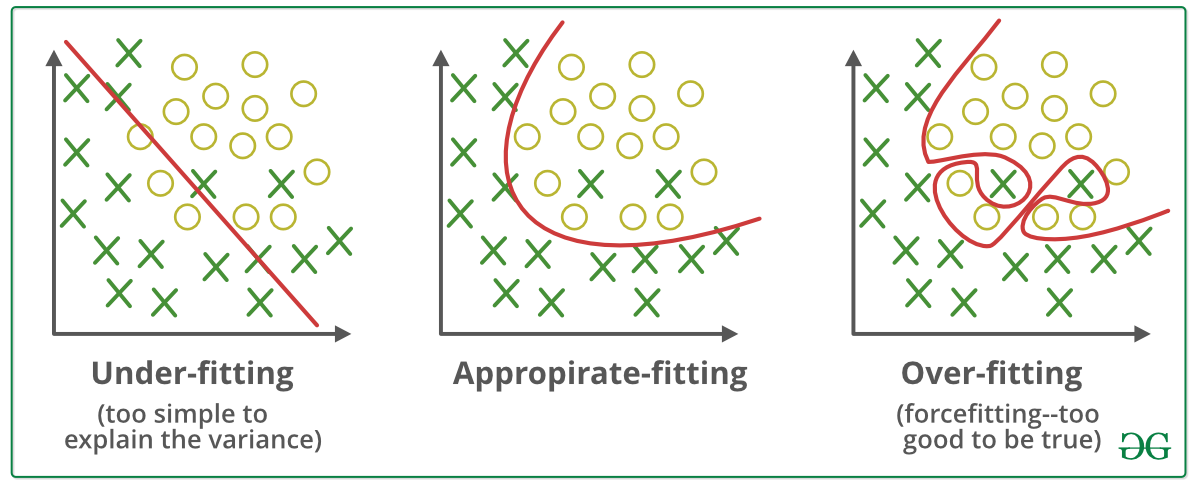

What is regularization in machine learning? In statistics, particularly in machine learning and inverse problems, regularization is the process of adding information in order to solve an ill-posed problem or to prevent overfitting. Overfitting occurs when a model learns the training data too well and therefore performs poorly on new data.
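The "ill-posed problem" idea can be seen in a tiny example. The plain-Python sketch below uses made-up data and a hypothetical helper `ridge_2d`, and it assumes the cost (1/n)·Σ(y_i - w·x_i)² + λ·Σw_j² with no intercept. The two features are perfectly collinear, so the unregularized normal equations have no unique solution; adding the L2 penalty makes the system solvable.

```python
# Two perfectly collinear features: the second column is an exact copy of the first.
X = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
y = [2.0, 4.0, 6.0]


def ridge_2d(X, y, lam):
    """Solve (X^T X + n*lam*I) w = X^T y for a two-feature design via Cramer's rule.

    This is the minimizer of (1/n) * sum((y_i - w.x_i)**2) + lam * (w1**2 + w2**2).
    """
    n = len(X)
    a = sum(r[0] * r[0] for r in X) + n * lam  # top-left entry of X^T X + n*lam*I
    b = sum(r[0] * r[1] for r in X)  # off-diagonal entry (the matrix is symmetric)
    d = sum(r[1] * r[1] for r in X) + n * lam  # bottom-right entry
    e = sum(r[0] * t for r, t in zip(X, y))  # first entry of X^T y
    f = sum(r[1] * t for r, t in zip(X, y))  # second entry of X^T y
    det = a * d - b * b
    if det == 0:
        raise ValueError("matrix is singular: the unregularized problem is ill-posed")
    return (e * d - b * f) / det, (a * f - b * e) / det


try:
    ridge_2d(X, y, lam=0.0)
except ValueError as err:
    print("lam = 0:", err)

print("lam = 1:", ridge_2d(X, y, lam=1.0))
```

With λ = 1 both weights come out equal (84/93, about 0.903 each): among all the ways of splitting the weight between the duplicated features, the penalty selects the even split.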

L2 regularization is the most common form of regularization.

Sometimes a machine learning model performs well on the training data but does not perform well on the test data. To avoid this, we use regularization so that the model fitted on the training set also performs well on the test set. In simple words, regularization discourages learning a more complex or flexible model, in order to prevent overfitting.

Every machine learning algorithm comes with built-in assumptions about the data. Regularization is an important concept in machine learning that addresses the overfitting problem: it prevents the model from overfitting by adding extra information to it.

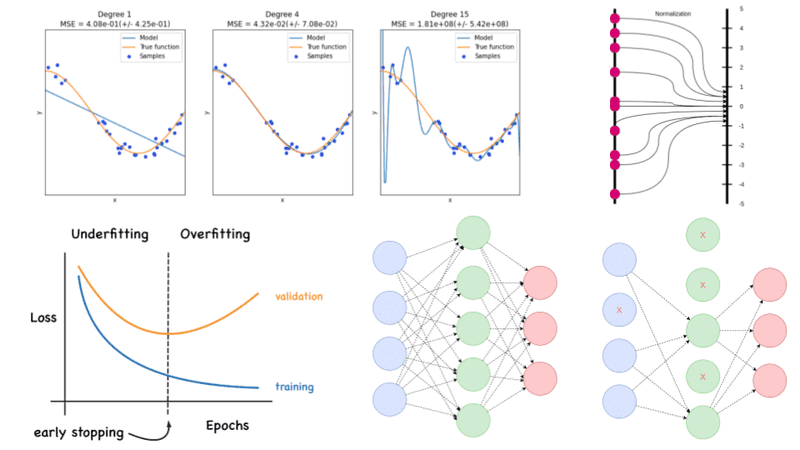

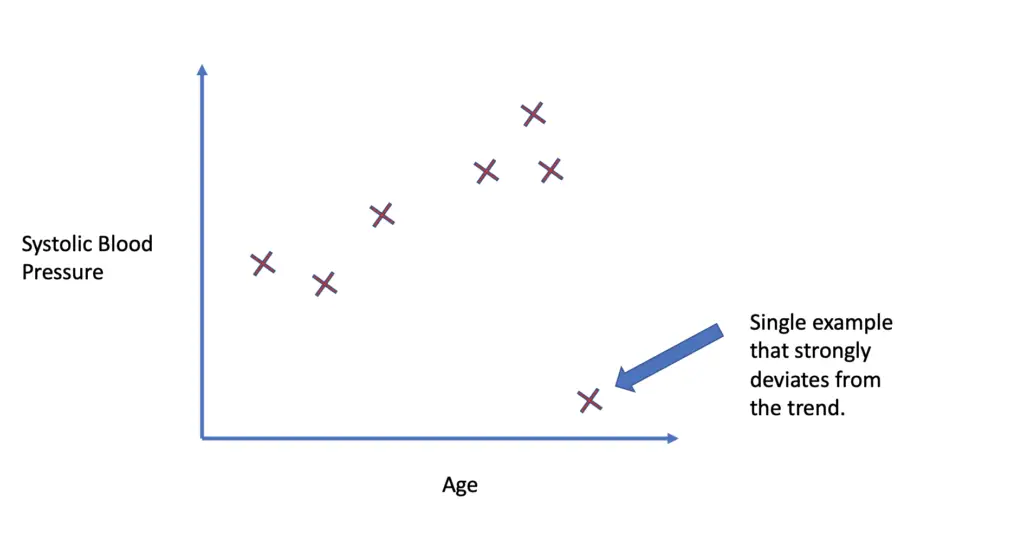

Overfitting is a phenomenon that occurs when a model learns the detail and noise in the training data to such an extent that it hurts the model's performance on new data. Regularization is one of the techniques used to control overfitting in highly flexible models.
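The following plain-Python sketch illustrates overfitting on made-up data; the values and the helper names `lagrange_predict`, `fit_line`, and `mse` are hypothetical. A polynomial of degree n - 1 through n noisy points reproduces every training target exactly, noise included, whereas a straight line cannot. A training error of zero is therefore no evidence that the model will do well on new data.

```python
# Noisy samples of a roughly linear trend (illustrative values).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [0.1, 1.2, 1.8, 3.3, 3.9]


def lagrange_predict(x, xs, ys):
    """Evaluate the degree-(n-1) polynomial that passes through every training point."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        term = yi
        for j, xj in enumerate(xs):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total


def fit_line(xs, ys):
    """Ordinary least-squares line y = a + b*x; returns (a, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return my - b * mx, b


def mse(preds, targets):
    """Mean squared error between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(targets)


a, b = fit_line(xs, ys)
poly_train = mse([lagrange_predict(x, xs, ys) for x in xs], ys)
line_train = mse([a + b * x for x in xs], ys)
print("training MSE, degree-4 polynomial:", poly_train)
print("training MSE, straight line:", line_train)
print("prediction at x = 6:", lagrange_predict(6.0, xs, ys), "vs", a + b * 6.0)
```

The polynomial's perfect training score comes from fitting the noise as well as the trend; regularization is aimed at exactly this situation, by penalizing the flexibility that lets a model chase noise.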

I have learned regularization from different sources, and I feel that learning from different sources helps. It is very important to understand regularization in order to train a good model. In the context of machine learning, regularization is the process that regularizes, or shrinks, the coefficients towards zero.

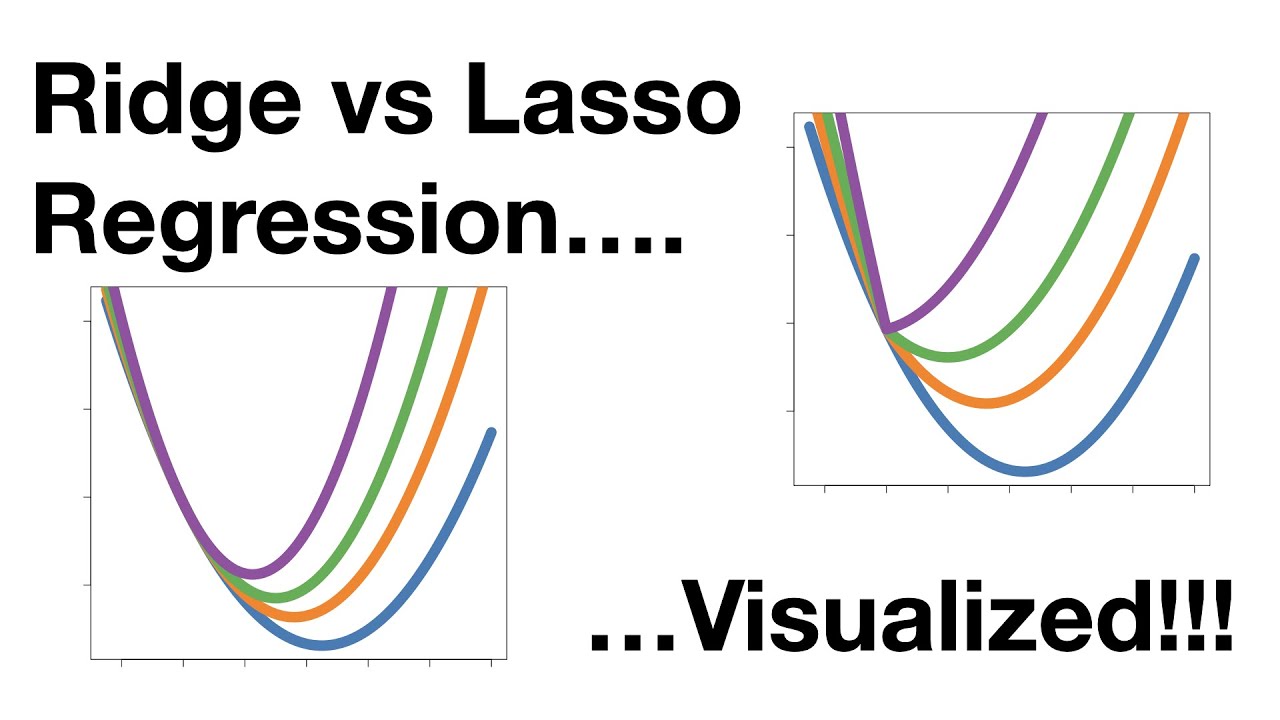

L1 regularization adds a penalty on the absolute values of the weights to the cost function, while L2 regularization adds a penalty on their squared values. The concept of regularization is widely used even outside the machine learning domain. Regularization calibrates machine learning models by making the loss function take feature importance into account.
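To make the difference between the two penalties concrete, here is a minimal Python sketch. The functions `l1_penalty`, `l2_penalty`, and `regularized_cost` are hypothetical helpers introduced for illustration, and `data_loss` stands for whatever unregularized loss (for example, mean squared error) the model already computes.

```python
def l1_penalty(weights):
    """Sum of absolute weight values (the L1 / Lasso penalty)."""
    return sum(abs(w) for w in weights)


def l2_penalty(weights):
    """Sum of squared weight values (the L2 / Ridge penalty)."""
    return sum(w * w for w in weights)


def regularized_cost(data_loss, weights, lam, kind="l2"):
    """Data loss plus lam times the chosen penalty on the weights."""
    if kind == "l1":
        penalty = l1_penalty(weights)
    elif kind == "l2":
        penalty = l2_penalty(weights)
    else:
        raise ValueError("kind must be 'l1' or 'l2'")
    return data_loss + lam * penalty


weights = [0.5, -1.0, 2.0]
print(l1_penalty(weights), l2_penalty(weights))
print(regularized_cost(1.0, weights, lam=0.1, kind="l1"))
print(regularized_cost(1.0, weights, lam=0.1, kind="l2"))
```

For the weights `[0.5, -1.0, 2.0]`, the L1 penalty is 3.5 and the L2 penalty is 5.25. The squared penalty is dominated by the large weight, while the absolute penalty grows linearly with every weight.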

The second term is new: this is our regularization penalty. We can see that our data is in our desired range of 0 to 1, with mean 0.14. Regularization techniques help reduce the chance of overfitting and help us get an optimal model.
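A regularized cost function typically has two parts. As a generic sketch, assuming a linear model with weights w_1, ..., w_p, n training pairs (x_i, y_i), and mean squared error as the data loss (notation introduced here for illustration), the first term measures how well the model fits the data and the second term is the penalty, scaled by λ:

```latex
\begin{aligned}
J_{\text{L2}}(\mathbf{w}) &= \frac{1}{n}\sum_{i=1}^{n}\bigl(y_i - \mathbf{w}^\top\mathbf{x}_i\bigr)^2 + \lambda \sum_{j=1}^{p} w_j^2 \\
J_{\text{L1}}(\mathbf{w}) &= \frac{1}{n}\sum_{i=1}^{n}\bigl(y_i - \mathbf{w}^\top\mathbf{x}_i\bigr)^2 + \lambda \sum_{j=1}^{p} \lvert w_j \rvert
\end{aligned}
```

Setting λ = 0 removes the penalty and recovers the unregularized cost.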

Regularization achieves this by introducing a penalty term in the cost function that assigns a higher penalty to complex curves. Although regularization is used with many different machine learning algorithms, including deep neural networks, in this article we use linear regression to explain it. L2 regularization penalizes the squared magnitude of all parameters in the objective function.
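For linear regression with a single feature and no intercept (simplifying assumptions made only for this sketch), the L2-penalized cost (1/n)·Σ(y_i - w·x_i)² + λ·w² has a closed-form minimizer, w = Σx_i·y_i / (Σx_i² + nλ), found by setting its derivative to zero. The helper name `ridge_1d` and the data are hypothetical.

```python
def ridge_1d(xs, ys, lam):
    """Minimizer of (1/n) * sum((y - w*x)**2) + lam * w**2 for one feature, no intercept."""
    n = len(xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + n * lam)


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

# Larger lam -> stronger penalty on the squared weight -> coefficient pulled toward zero.
for lam in (0.0, 0.1, 1.0, 10.0):
    print(f"lam = {lam:>4}: w = {ridge_1d(xs, ys, lam):.4f}")
```

With λ = 0 this reduces to ordinary least squares through the origin. As λ grows, the denominator grows and w shrinks toward zero, which is the coefficient shrinkage that the L2 penalty is meant to produce.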

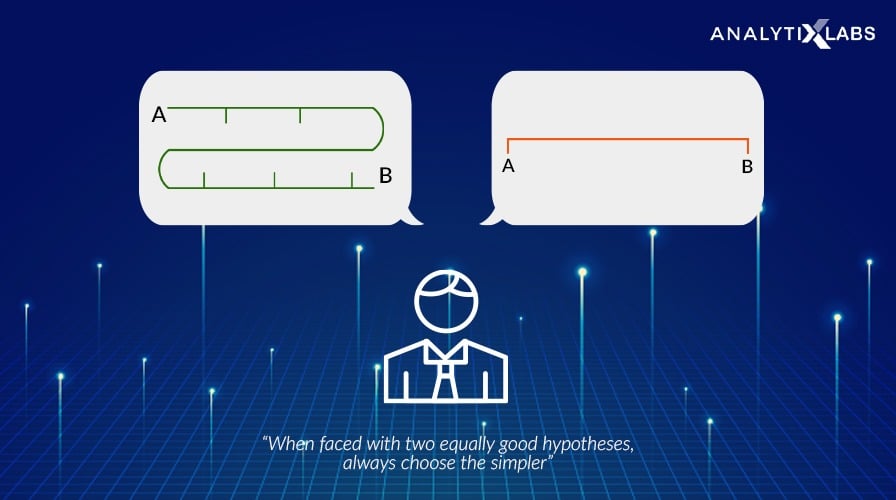

Regularization is a concept by which machine learning algorithms can be prevented from overfitting a dataset. This article focuses on L1 and L2 regularization. Regularization is an application of Occam's Razor.

L2 regularization estimates the mean of the data in order to avoid overfitting. The λ variable is a hyperparameter that controls the amount, or strength, of the regularization we apply. In other words, regularization means discouraging the learning of a more complex or more flexible machine learning model, to prevent overfitting.

Overfitting happens because the model is trying too hard to capture the noise in the training dataset. A regression model that uses the L1 regularization technique is called LASSO (Least Absolute Shrinkage and Selection Operator) regression.
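The L1 penalty can drive some coefficients to exactly zero, which is what the "Selection" in LASSO refers to. The sketch below fits LASSO with cyclic coordinate descent and soft-thresholding, one common fitting method chosen here for brevity. It assumes the cost (1/n)·Σ(y_i - w·x_i)² + λ·Σ|w_j| with no intercept; the helper names and data are hypothetical. The two features are orthogonal, and the target depends strongly on the first and only weakly on the second.

```python
def soft_threshold(rho, t):
    """Shrink rho toward zero by t; values inside [-t, t] become exactly 0."""
    if rho > t:
        return rho - t
    if rho < -t:
        return rho + t
    return 0.0


def lasso_coordinate_descent(X, y, lam, n_sweeps=100):
    """Minimize (1/n) * sum((y_i - w.x_i)**2) + lam * sum(|w_j|) by cyclic coordinate descent."""
    n, p = len(X), len(X[0])
    w = [0.0] * p
    for _ in range(n_sweeps):
        for j in range(p):
            # Correlation of feature j with the residual that ignores feature j's contribution.
            rho = sum(
                xi[j] * (yi - sum(w[k] * xi[k] for k in range(p) if k != j))
                for xi, yi in zip(X, y)
            )
            a = sum(xi[j] ** 2 for xi in X)
            # Exact one-dimensional minimizer for coordinate j.
            w[j] = soft_threshold(rho, n * lam / 2.0) / a
    return w


# Orthogonal features; y = 3 * x1 + 0.2 * x2.
X = [[1.0, 1.0], [-1.0, 1.0], [1.0, -1.0], [-1.0, -1.0]]
y = [3.2, -2.8, 2.8, -3.2]
print(lasso_coordinate_descent(X, y, lam=1.0))
```

With λ = 1, the first weight is shrunk from 3 to about 2.5, and the weak second weight is set to exactly 0.0. An L2 penalty on the same data would only shrink that second weight, not remove it.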

Of course, fancy definitions and complicated terminology are of little worth to a complete beginner. Put simply, a model that is overfitting will have low accuracy on new data.

Regularization is also considered a process of adding more information to resolve a complex issue and avoid overfitting. It also improves the performance of models on new inputs. In machine learning, regularization is a procedure that shrinks the coefficients towards zero.

Regularization is a technique used to solve the overfitting problem of machine learning models. One of the major aspects of training a machine learning model is avoiding overfitting: during training, the model can easily be overfitted or underfitted.

In practice, the learning rate α and the regularization term λ are the hyperparameters that you'll spend the most time tuning. Regularization applies to objective functions in ill-posed optimization problems. It is the most widely used technique for penalizing complex models in machine learning, and it is deployed to reduce overfitting, that is, to reduce generalization error, by keeping the network weights small.
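The roles of α and λ can be seen in a plain-Python batch gradient-descent sketch for L2-regularized linear regression. It assumes the cost (1/n)·Σ(y_i - w·x_i)² + λ·Σw_j² with no intercept; the function name and data are hypothetical. `alpha` sets the step size of each update, while `lam` adds the term 2λw_j to each gradient component, pulling every weight toward zero.

```python
def ridge_gradient_descent(X, y, lam, alpha, n_iters=1000):
    """Minimize (1/n) * sum((y_i - w.x_i)**2) + lam * sum(w_j**2) by batch gradient descent.

    X is a list of feature rows; no intercept is fitted.
    """
    n, p = len(X), len(X[0])
    w = [0.0] * p
    for _ in range(n_iters):
        # Residuals of the current linear model on every training row.
        residuals = [yi - sum(wj * xij for wj, xij in zip(w, xi)) for xi, yi in zip(X, y)]
        # Gradient of the data term plus the gradient of the L2 penalty (2 * lam * w_j).
        grad = [
            -2.0 / n * sum(r * xi[j] for r, xi in zip(residuals, X)) + 2.0 * lam * w[j]
            for j in range(p)
        ]
        # Step of size alpha against the gradient.
        w = [wj - alpha * gj for wj, gj in zip(w, grad)]
    return w


X = [[1.0], [2.0], [3.0]]
y = [2.0, 4.0, 6.0]
print(ridge_gradient_descent(X, y, lam=0.1, alpha=0.05))
```

For this single feature the result should agree with the closed form Σx_i·y_i / (Σx_i² + nλ) = 28 / 14.3, about 1.958. If `alpha` is set too large the updates diverge instead of converging, which is one reason both hyperparameters need tuning.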

This is exactly why we use regularization in applied machine learning. In some cases, an algorithm's built-in assumptions are reasonable and ensure good performance, but often they can be relaxed to produce a more general learner that might perform better. By noise, we mean the data points that don't really represent the true properties of the data.

In general, regularization involves augmenting the input information to enforce generalization. As seen above, we want our model to perform well both on the training data and on new, unseen data, which means the model must be able to generalize. In machine learning, regularization is a technique used to avoid overfitting.

Regularization is one of the key concepts in machine learning because it helps choose a simple model rather than a complex one. There are essentially two types of regularization techniques: L1 regularization (Lasso) and L2 regularization (Ridge).

We can regularize machine learning methods through the cost function, using either L1 or L2 regularization. Implicit regularization is the most important form of regularization, and its role in modern machine learning is paramount.

Overfitting means the model is not able to predict the output correctly when it deals with unseen data. Regularization helps to reduce overfitting by adding constraints to the model-building process.
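The "constraints" view can be written out for the L2 case. As a sketch, assuming a squared-error loss over n training pairs (x_i, y_i) and weights w_1, ..., w_p (notation introduced here), the constrained problem on the left limits the total squared weight to a budget t, and the penalized problem on the right is the form used in cost functions. Because this loss is convex, each penalty strength λ ≥ 0 corresponds to a suitable budget t, so the two formulations describe the same family of solutions:

```latex
\min_{\mathbf{w}} \frac{1}{n}\sum_{i=1}^{n}\bigl(y_i - \mathbf{w}^\top\mathbf{x}_i\bigr)^2
\ \ \text{s.t.}\ \ \sum_{j=1}^{p} w_j^2 \le t
\qquad\Longleftrightarrow\qquad
\min_{\mathbf{w}} \frac{1}{n}\sum_{i=1}^{n}\bigl(y_i - \mathbf{w}^\top\mathbf{x}_i\bigr)^2 + \lambda \sum_{j=1}^{p} w_j^2
```

Replacing w_j² with |w_j| in both problems gives the corresponding L1 (Lasso) pair.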

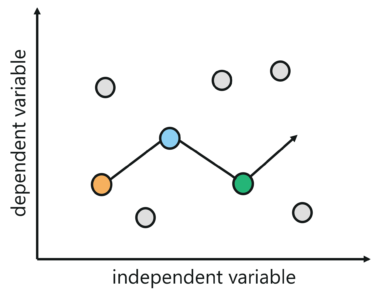

Intuitively, this means that we force our model to give less weight to features that are less important for predicting the target variable and more weight to those that are more important.
